A Deep Look into BrowserStack, CrossBrowserTesting, & SauceLabs

The objective of this post is to get a simple test running in BrowserStack, SauceLabs, and CrossBrowserTesting. In doing so, I want to compare the features and document the experience of running my first test from a trial account.

BrowserStack

BrowserStack includes the following services: Live, Automate, and Screenshots & Responsive.

Live

This lets you open a VM within your browser, running different OSes and different versions of IE, Firefox, Chrome, etc., and manually click through your site.

This will only work with sites that are live on the web. To use it against a local server, you'll have to install the Chrome extension.

Automate

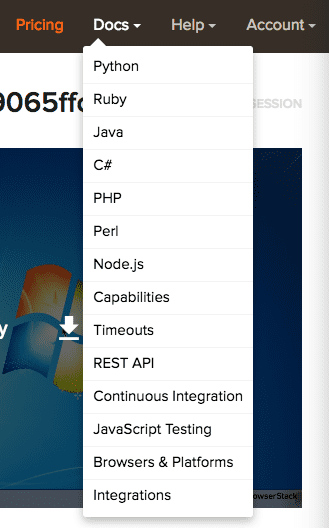

This allows you to run your local Selenium tests on BrowserStack. There are quite a few options depending on which testing tool you'd like to use. The Docs tab in the menu has setup docs for each supported platform.

Using the API credentials in Account Settings, you can get a test running on BrowserStack within minutes. If you're testing in Ruby, BrowserStack has a repo with some sample tests in rspec, capybara, and cucumber watir.

When you run your tests on BrowserStack, you have the ability to watch a recording of them running. You can see the test loading a webpage, clicking, filling in forms, etc. If a test is failing, you can try to debug it in the Live tab.

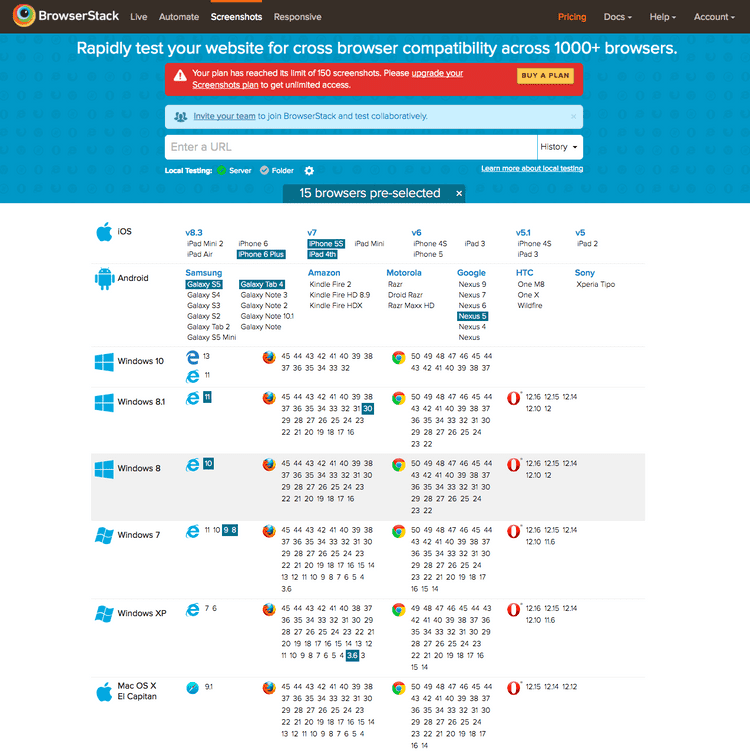

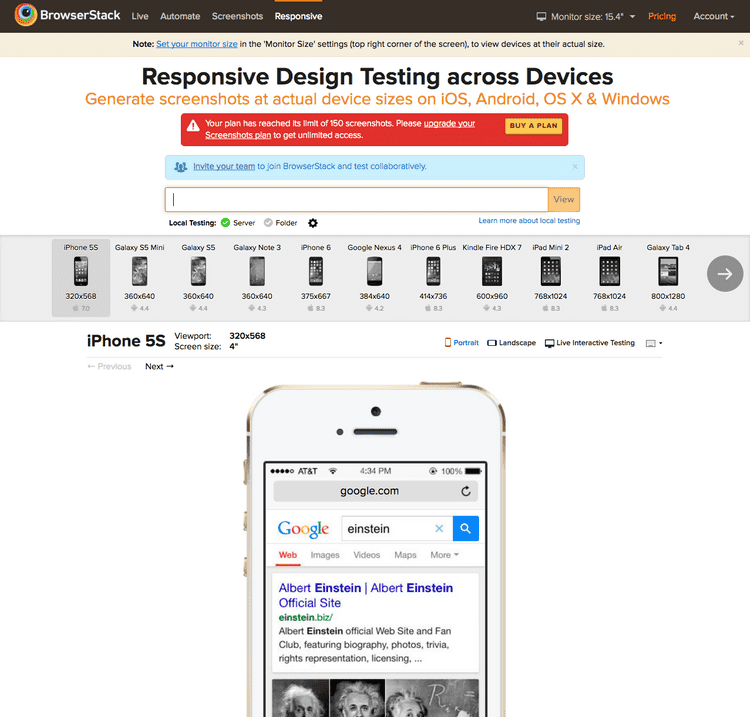

Screenshots & Responsive

This feature allows you to provide a URL where BrowserStack will visit the site with a multitude of operating system & browser combinations.

Example Test

Here's a sample test that logs into PagerDuty and asserts that the h1 text is correct.

    require_relative './spec_helper.rb'
    require 'selenium-webdriver'

    describe "Visiting Sign in page" do
      it "loads the form successfully", :run_on_browserstack => true do
        # Wait up to 10 seconds for elements to appear before failing a lookup.
        @driver.manage.timeouts.implicit_wait = 10
        @driver.navigate.to "https://pdt-browserstack.pagerduty.com"
        raise "Unable to load PagerDuty." unless @driver.title.include? "PagerDuty"

        # Fill in the credentials (read from the environment) and submit the form.
        email = @driver.find_element :id, "user_email"
        email.send_keys ENV['username']
        password = @driver.find_element :id, "user_password"
        password.send_keys ENV['password']
        password.submit

        # After logging in we should land on the Incidents page.
        expect(@driver.title).to eql("Incidents - PagerDuty")
        header = @driver.find_element :css, '.page-header h1'
        expect(header.text).to eql("Incidents on All Teams")
      end
    end

You can see this gist for more details on the driver/BrowserStack setup. BrowserStack also provides this tool to help you construct your caps config, which is where you set the OS, browser, version, etc. You would then enter these values in your driver.
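For reference, here's a rough sketch of what the driver setup in a spec_helper might look like. This is not the gist itself: the hub host, the environment variable names, the helper names, and the capability keys and values are all assumptions on my part, so check them against the caps tool and your account settings before relying on them.

```ruby
# Sketch of a spec_helper.rb for BrowserStack. The hub host, env var names,
# helper names, and capability keys/values are assumptions for illustration.

HUB_HOST = 'hub-cloud.browserstack.com' # assumed hub host; confirm in the docs

# Capabilities ("caps") describe the OS/browser the remote session should use.
def browserstack_caps
  {
    'os'              => 'Windows',
    'os_version'      => '7',
    'browser'         => 'IE',
    'browser_version' => '10.0',
    'name'            => 'PagerDuty sign in' # label for the test run
  }
end

# Build the remote hub URL from the API credentials in Account Settings.
def browserstack_url(user, key)
  "https://#{user}:#{key}@#{HUB_HOST}/wd/hub"
end

# Create the remote driver. selenium-webdriver is required lazily so the
# helpers above can be loaded without the gem installed.
def build_driver(user: ENV['BROWSERSTACK_USER'], key: ENV['BROWSERSTACK_KEY'])
  require 'selenium-webdriver'
  caps = Selenium::WebDriver::Remote::Capabilities.new(browserstack_caps)
  Selenium::WebDriver.for(
    :remote,
    url: browserstack_url(user, key),
    # Older selenium-webdriver releases take :desired_capabilities;
    # newer ones expect :capabilities instead.
    desired_capabilities: caps
  )
end

# Only examples tagged :run_on_browserstack get a remote driver.
if defined?(RSpec)
  RSpec.configure do |config|
    config.before(:each, run_on_browserstack: true) { @driver = build_driver }
    config.after(:each, run_on_browserstack: true) { @driver.quit if @driver }
  end
end
```

Keeping the caps in one helper means switching to another OS/browser combination is a one-line change, and the test itself never has to know where the browser is running.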

Parallelization

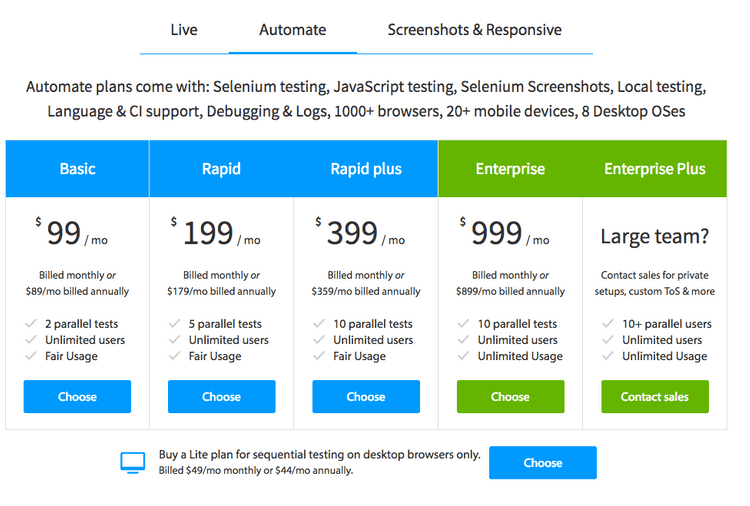

BrowserStack can run up to 10 parallel tests depending on the plan you choose.
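To actually take advantage of those parallel slots, your test runner has to fan the work out itself. Here's a minimal sketch of one way to do that with plain Ruby threads, capping concurrency at whatever your plan allows. The `run_in_parallel` helper and the shape of the combos are hypothetical; in a real suite the block would start a remote session (or shell out to rspec) for each combination.

```ruby
# Run one job per OS/browser combination, never exceeding `limit` at once.
# Returns a hash mapping each combination to the value its block returned.
def run_in_parallel(combos, limit:)
  queue = Queue.new
  combos.each { |combo| queue << combo }
  queue.close # once drained, pop returns nil and the workers stop

  results = Queue.new
  workers = Array.new([limit, combos.size].min) do
    Thread.new do
      while (combo = queue.pop)
        results << [combo, yield(combo)]
      end
    end
  end
  workers.each(&:join)

  Array.new(results.size) { results.pop }.to_h
end

# Example: three combinations, at most two running at a time.
combos = [
  { os: 'Windows 7', browser: 'IE 10' },
  { os: 'Windows 7', browser: 'Firefox' },
  { os: 'OS X',      browser: 'Chrome' }
]
outcomes = run_in_parallel(combos, limit: 2) do |combo|
  # Placeholder for "run the suite against this combo".
  "ran on #{combo[:os]} / #{combo[:browser]}"
end
outcomes.each_value { |message| puts message }
```

A fixed pool of worker threads pulling from a queue keeps the number of open sessions at or below the limit, which matters because sessions beyond your plan's cap would presumably be queued or rejected by the service.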

Automated Builds

I reached out to BrowserStack and they currently do not have any way to host a test suite and automatically run it. The recommended approach is to have your CI run the BrowserStack tests.

Billing

It seems like billing is based on the feature set. There are different pricing plans if you'd like the Live feature set vs Automate. The plan types are Live, Automate, and Screenshots & Responsive.

Each plan for Automate comes with a limit on how many hours per day you can use, which is their "Fair Policy". Here's the definition: https://www.browserstack.com/question/619

Closing Thoughts

After playing with BrowserStack for a little over a day, it is a solid tool. The documentation is really good and easy to follow.

The one thing I don't like though is having Live and Automate as separate plans. To me they seem to go hand in hand. When an automated test fails, you will probably want to log in to a VM and try the same steps to see why it's failing, which you'd have to pay extra money for if you were on the Automate plan.

I do believe that you would get a certain amount of minutes for Live if you were on Automate. The free plans have minute limitations, but once you run out, you're screwed.

SauceLabs

SauceLabs provides manual and automated testing on what they call "The World's Largest Cloud Based Selenium Grid".

Just like BrowserStack, SauceLabs has the ability to execute your automated tests on their cloud infrastructure and the ability to fire up a VM in different OS/browser combinations and click around.

SauceLabs does not have the feature to take screenshots like BrowserStack does, although you can see screenshots in the individual test runs as well as a recording of the test run.

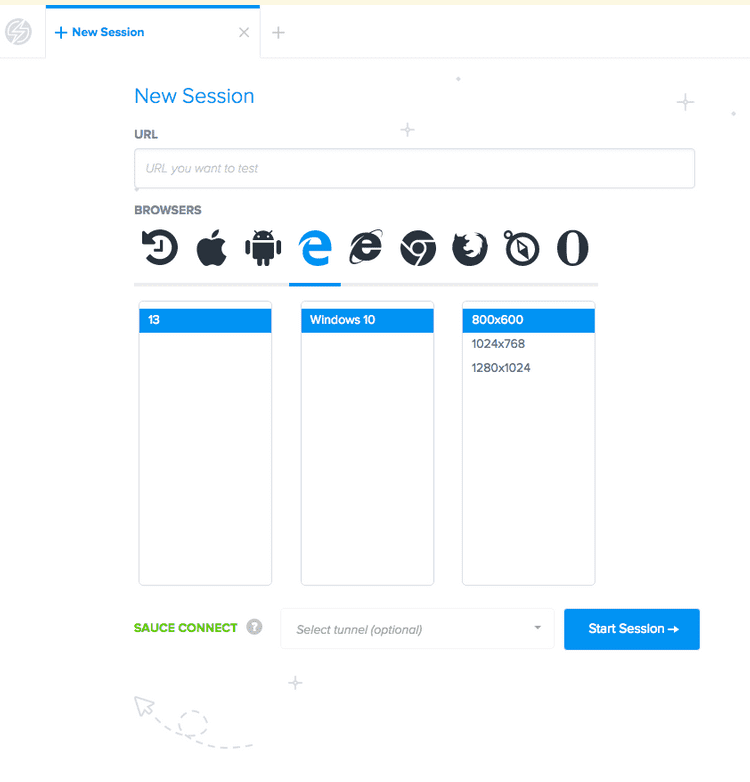

Manual Tests

This is comparable to BrowserStack's Live functionality. To connect to a local server, you'll have to set up a tunnel.

Automated Tests

Getting a test running on SauceLabs is very similar to BrowserStack. You basically just need to grab the access key from your account and plug that in to the driver for your test.

SauceLabs also provides the ability to see a video recording of your test run.

In my opinion, it's a little harder to navigate the docs as it's written wiki style. Things are organized more as guides and how-to's rather than by testing tool/technology.

Example Test

I was able to use the exact same test, but just had to change the driver. You can use this tool to gather the caps config options to enter into your driver.

Here's a gist that runs the same PagerDuty login test, but in SauceLabs.
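To give a sense of how small the change is, here's a sketch of the SauceLabs-specific pieces: the hub URL and the caps. The host, the environment-independent helper names, and the capability keys and values below are my assumptions rather than the contents of the gist, so confirm them with the caps tool.

```ruby
# Sketch of the SauceLabs-specific driver settings. The host, helper names,
# and capability keys/values are assumptions for illustration.

SAUCE_HOST = 'ondemand.saucelabs.com' # assumed hub host; confirm in the docs

# The access key from your account goes straight into the hub URL.
def sauce_url(user, access_key)
  "https://#{user}:#{access_key}@#{SAUCE_HOST}:443/wd/hub"
end

# Same target as the BrowserStack run (IE10 on Windows 7), different key names.
def sauce_caps
  {
    'browserName' => 'internet explorer',
    'version'     => '10.0',
    'platform'    => 'Windows 7',
    'name'        => 'PagerDuty sign in' # label for the test run
  }
end

puts sauce_url('my-user', 'my-access-key')
```

Everything else (navigating, filling in the form, the assertions) stays the same, because the test only talks to `@driver` and never to the service directly.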

JIRA Plugin

SauceLabs has a JIRA plugin that can file a ticket when a build fails. It will prefill the ticket with all of the metadata around the test failure. This is very cool if your team uses JIRA.

Automated Builds

SauceLabs currently has Sauce Runner in beta. This is a feature that will allow you to provide SauceLabs with a GitHub repo URL that contains your SauceLabs tests. SauceLabs would then run these whenever you log in and manually trigger the build. They also provide a REST endpoint (with a sample curl command) that you can call from your CI server or whatever scheduled process you may have. Note that this is still in beta and is only offered to some users.

Parallelization

SauceLabs can run up to 8 concurrent sessions depending on the plan. Or if you choose the Enterprise plan, then the sky's the limit.

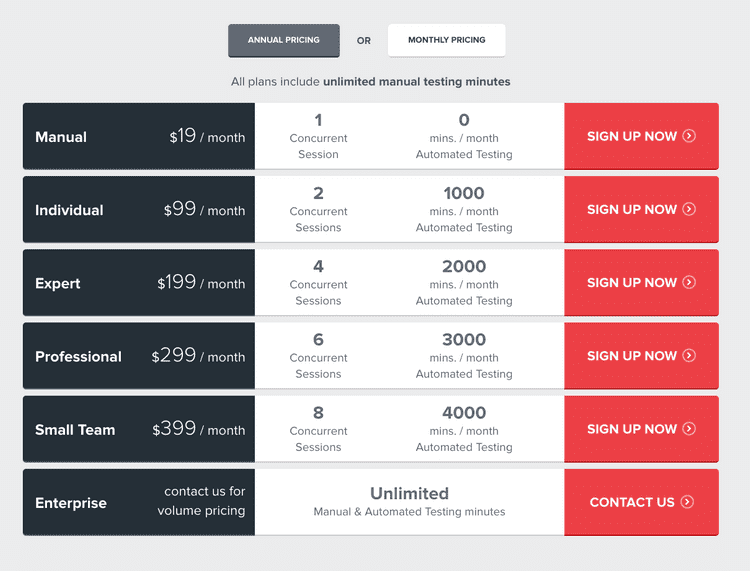

Billing

SauceLabs provides manual and automated testing on all plans, but will limit the number of concurrent sessions and automated testing minutes based on the plan you choose. The minutes are also on a monthly basis as opposed to daily.

Closing Thoughts

I like SauceLabs and how the plans include unlimited manual testing. To me, this is a crucial feature to have so that you can troubleshoot why an automated test may be failing.

Sauce Runner will also be a handy feature once released. I'm curious if they will allow a schedule to be set.

The JIRA plugin is also a nice to have. This will allow a smooth workflow when a build fails.

As a developer, I'd be more inclined to choose SauceLabs over BrowserStack, but we still have to check out Cross Browser Testing first.

Cross Browser Testing

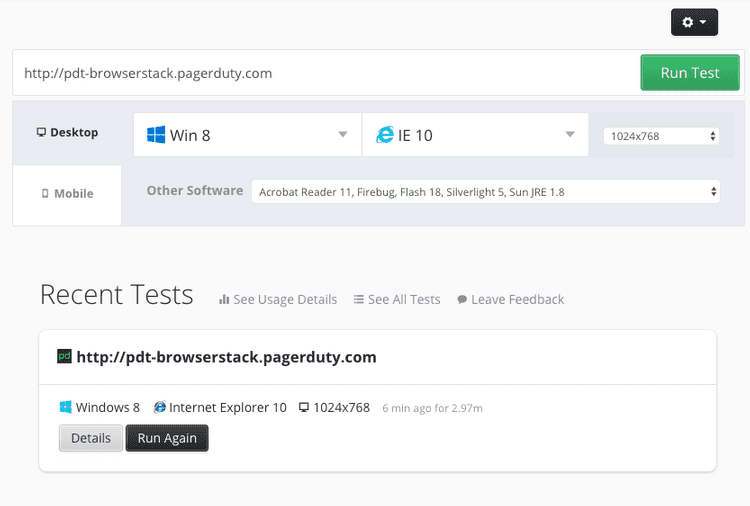

CrossBrowserTesting touts itself by the fact that they use real, physical devices. They have the usual manual testing and automated testing.

The documentation for automated testing is thin. They do provide examples for Python, Java, C#, Ruby, and JavaScript, but outside of those there is very little to go on.

CBT also has the ability to take screenshots, which seems comparable to BrowserStack's screenshot functionality.

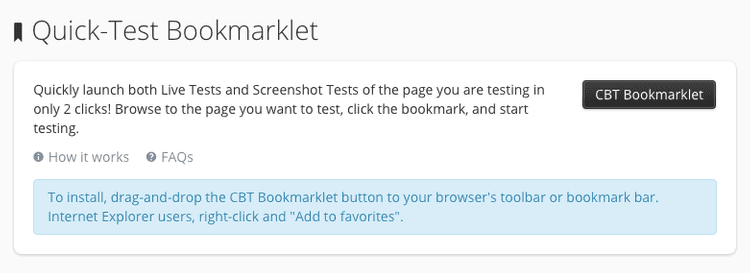

Quick-Test Bookmarklet

CBT has a neat bookmarklet that will allow you to take screenshots in various OS/browser combinations or hop in a live test session (in the OS/browser of your choice) of the current page that you're in. So for example if I was on google.com and I fired the bookmarklet, I can see google on Windows 7 IE 10 assuming I chose that.

Testing against a local server

CBT provides the option to run tests against a local server either by downloading their Chrome extension or by using their Java or node.js module from the command line.

Parallelization

CBT can run up to 20 concurrent tests (business plan).

Example Test

Because of the lack of documentation, this test took a little extra time to set up (like knowing which CrossBrowserTesting capabilities are required). There are also some manual things you'd have to do if you want CrossBrowserTesting to know if a suite fails.

Upon failure, you're supposed to send a POST to the score API endpoint and set the score to pass, fail, or unset. Also if you want a screenshot upon failure, you have to send a POST to the screenshot API.
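Here's a rough sketch of what reporting the score could look like with Ruby's standard library. Treat the specifics as assumptions: the base URL, the path layout, the form field names, and even the HTTP verb are placeholders that follow the description above, and the helper names are mine. Check CBT's API docs for the real endpoint before using this.

```ruby
require 'net/http'
require 'uri'

# Assumed base URL and path layout; verify against CBT's API docs.
CBT_API_BASE = 'https://crossbrowsertesting.com/api/v3/selenium'

# Map a test outcome to a score: no exception means the test passed.
def score_for(exception)
  exception.nil? ? 'pass' : 'fail'
end

# Build (but don't send) the request that sets the score for a session.
# The form field names here are assumptions.
def build_score_request(session_id, score, user:, key:)
  request = Net::HTTP::Post.new(URI("#{CBT_API_BASE}/#{session_id}"))
  request.basic_auth(user, key)
  request.set_form_data('action' => 'set_score', 'score' => score)
  request
end

# Send a previously built request over HTTPS and return the response.
def send_score(request)
  uri = request.uri
  Net::HTTP.start(uri.host, uri.port, use_ssl: true) do |http|
    http.request(request)
  end
end

request = build_score_request('example-session-id', score_for(nil),
                              user: 'me@example.com', key: 'my-auth-key')
puts "#{request.method} #{request.path}"
```

Splitting "build" from "send" makes it easy to call `send_score` from an RSpec `after` hook, where the example's exception (if any) tells you which score to report.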

Here's a gist with an example rspec test logging into PagerDuty on CrossBrowserTesting.

After execution, you can see a video recording of the test run.

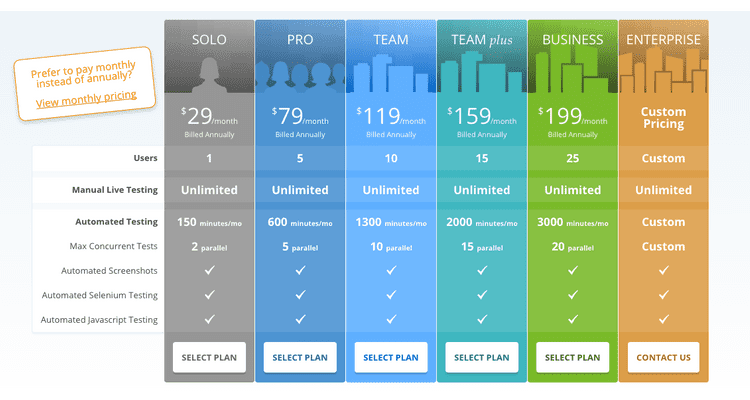

Billing

Their billing model is similar to SauceLabs in that manual testing is unlimited across all plans, but users, automated testing minutes, and concurrent tests are increased as you move up the tiers.

Closing Thoughts

Cross Browser Testing seems like a young product, but if testing with real, physical devices as opposed to VMs is a big deal to you, then this would be a good choice.

The product actually works well and the UX is clean, but the lack of documentation makes me a little nervous if I were to choose CBT.

Benchmarks

Running a simple bash one-liner, I recorded the execution time of each test run. I ran the test 3 times on SauceLabs, CrossBrowserTesting, and BrowserStack. I would have run it more times, but I only get a limited set of minutes on a trial account. Anyway, here are the results:

| Service | Execution Time |
| --- | --- |
| BrowserStack | 23.2s |
| CrossBrowserTesting | 41.8s |
| SauceLabs | 28.7s |

The test basically visits a PagerDuty sign in page, logs in, and asserts the correct title. The test is run in Windows 7 on IE10.

Take these benchmarks with a grain of salt. Maybe one of the services is having a bad day. I did only run the test 3 times.
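If you want to repeat the measurement yourself, here's a small Ruby sketch of the same idea as the bash one-liner: time a block a few times and average the results. The helper names and the spec file path in the comment are hypothetical, and this is not the exact command I ran.

```ruby
require 'benchmark'

# Run the given block `runs` times and return each wall-clock time in seconds.
def time_runs(runs)
  Array.new(runs) { Benchmark.realtime { yield } }
end

# Arithmetic mean of a list of timings.
def average(times)
  times.sum / times.size
end

# In a real run the block would launch the test, for example:
#   system('rspec', 'spec/sign_in_spec.rb')   # hypothetical spec path
# A short sleep stands in for it here.
times = time_runs(3) { sleep 0.05 }
puts format('runs: %s', times.map { |t| format('%.2fs', t) }.join(', '))
puts format('average: %.2fs', average(times))
```

With only three runs the average is still noisy, so printing the individual timings alongside it makes outliers (like a service having a bad day) easier to spot.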

Conclusion

I think BrowserStack, SauceLabs, and CrossBrowserTesting are all good in their own right. If I were a UI designer, I would love the screenshot capability that BrowserStack and CBT provide. If you're on a budget, then you also have to pay attention to the fact that BrowserStack charges separately for manual and automated testing. But I would say that BrowserStack is ideal for UI designers because of its support, documentation, and good product UX.

As a developer, I like SauceLabs and BrowserStack. If you're going to write tests that run in a multitude of browser/platform combinations, then concurrency will reduce the total execution time. If I'm concerned with budget, however, then I would go with SauceLabs. They seem to provide more for less money, just by comparing their pricing plans (looking at testing minutes and the fact that all plans come with unlimited manual testing).

The only edge that CrossBrowserTesting has is the fact that they run on real, physical devices. This has its drawbacks in speed and parallelization though. If this is what you're looking for, then this is the service for you.